I joined Codestory as Founding Design Engineer and their first hire, kickstarting my career in AI and a new life in London.

The two (formidable) founders had been building a code editor called Aide – one of the first forks of VSCode – and sidecar, the AI brain. They hired me to spearhead design and develop the product on the editor side.

Building at the frontier

These were the early days of codegen. Nobody really knew which approach would stick, and I was eager to throw some darts myself. I was also ecstatic to hack on VSCode after Ives van Hoorne, the founder of CodeSandbox, brought it to my attention as “one of the most impressive codebases” he had ever read.

When I joined, the founders were cooking a couple of the many features we developed ahead of the competition. They may seem trivial nowadays – like multi-file editing – but they were engineering milestones back then. IDEs like Cursor were just starting out.

We were whipping up prototypes of never-seen-before features every other week. Coming to the office was magical.

Aide autocompletes a verbose VSCode API call, explains a selected code range, and generates code based on an inline input.

I know that features like autocomplete and contextual code generation are a given nowadays, but it felt fantastic to hack on VSCode and to be among the first to create AI code editing experiences.

I was tapping into editor internals like the AST, LSP, document buffers, source control management, and terminals. We used them as real-time inputs and APIs over a bidirectional streaming RPC layer talking to sidecar, the Rust binary powering agentic chat and code editing.
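
To make the shape of that layer more concrete, here is a minimal sketch of the editor half, in TypeScript like VSCode itself. Aide’s actual wire protocol with sidecar isn’t described in this post. The message types, the `send` callback standing in for the transport, and the tool names are therefore all hypothetical. What the sketch does show is the bidirectionality: while a chat turn streams deltas into the editor, the agent can call back into editor APIs on the same channel.

```typescript
// Hypothetical message shapes; not Aide's real protocol with sidecar.
type EditorToSidecar =
  | { id: number; type: "chat"; prompt: string }
  | { id: number; type: "toolResult"; result: string };

type SidecarToEditor =
  | { id: number; type: "delta"; text: string }
  | { id: number; type: "done" }
  | { id: number; type: "toolCall"; tool: string; arg: string };

// Editor capabilities the agent may call back into (names are illustrative).
type EditorTools = Record<string, (arg: string) => string>;

interface PendingTurn {
  text: string;
  onDelta: (chunk: string) => void;
  resolve: (full: string) => void;
}

class SidecarClient {
  private nextId = 1;
  private readonly pending = new Map<number, PendingTurn>();
  private readonly send: (msg: EditorToSidecar) => void;
  private readonly tools: EditorTools;

  constructor(send: (msg: EditorToSidecar) => void, tools: EditorTools) {
    this.send = send;
    this.tools = tools;
  }

  // Editor -> sidecar: start a chat turn; text streams back as "delta" messages.
  chat(prompt: string, onDelta: (chunk: string) => void): Promise<string> {
    const id = this.nextId++;
    return new Promise<string>((resolve) => {
      this.pending.set(id, { text: "", onDelta, resolve });
      this.send({ id, type: "chat", prompt });
    });
  }

  // Sidecar -> editor: every inbound message is dispatched here.
  receive(msg: SidecarToEditor): void {
    if (msg.type === "toolCall") {
      // The agent asks the editor for context; reply on the same id.
      const tool = this.tools[msg.tool];
      const result = tool ? tool(msg.arg) : `unknown tool: ${msg.tool}`;
      this.send({ id: msg.id, type: "toolResult", result });
      return;
    }
    const turn = this.pending.get(msg.id);
    if (!turn) return; // late message for a finished or unknown turn
    if (msg.type === "delta") {
      turn.text += msg.text;
      turn.onDelta(msg.text);
    } else {
      this.pending.delete(msg.id);
      turn.resolve(turn.text);
    }
  }
}
```

Keying in-flight turns by request id is what lets several chat turns and tool calls interleave on one stream without blocking each other.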

Working on the IDE changed me as an engineer. I loved diving deep into this massive and neatly organized TypeScript codebase. It showed me the power of intentional design. The codebase combines dependency injection with a clean layered architecture, making it possible to mix and match any part of it while preserving core functionality.
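
VSCode’s own container is built around service identifiers created with `createDecorator` and resolved by an instantiation service. The sketch below is a deliberately simplified, decorator-free imitation of that idea, not VSCode’s code. `ServiceId`, `ServiceRegistry`, `createInstance`, and the two example services are hypothetical names chosen for illustration.

```typescript
// A service identifier: a unique runtime key that also carries the service's type.
interface ServiceId<T> {
  readonly name: string;
  readonly _type?: T; // phantom field, never set; only the type checker uses it
}

function createServiceId<T>(name: string): ServiceId<T> {
  return { name };
}

// Maps identifiers to concrete implementations.
class ServiceRegistry {
  private readonly services = new Map<ServiceId<unknown>, unknown>();

  set<T>(id: ServiceId<T>, impl: T): this {
    this.services.set(id, impl);
    return this;
  }

  get<T>(id: ServiceId<T>): T {
    if (!this.services.has(id)) throw new Error(`No implementation for ${id.name}`);
    return this.services.get(id) as T;
  }
}

// Construct an object from its declared dependencies, resolved by identifier.
function createInstance<A extends unknown[], R>(
  ctor: new (...args: A) => R,
  deps: { [K in keyof A]: ServiceId<A[K]> },
  registry: ServiceRegistry,
): R {
  const args = (deps as unknown as ServiceId<unknown>[]).map((id) => registry.get(id)) as A;
  return new ctor(...args);
}

// Example services (hypothetical). Interface and identifier share a name, VSCode-style.
interface ILogService { info(message: string): void }
const ILogService = createServiceId<ILogService>("logService");

interface IModelService { complete(prefix: string): string }
const IModelService = createServiceId<IModelService>("modelService");

// Depends only on interfaces; never constructs its collaborators itself.
class CompletionController {
  private readonly model: IModelService;
  private readonly log: ILogService;

  constructor(model: IModelService, log: ILogService) {
    this.model = model;
    this.log = log;
  }

  suggest(prefix: string): string {
    const completion = this.model.complete(prefix);
    this.log.info(`suggested ${completion.length} chars`);
    return completion;
  }
}
```

With this setup, swapping in a fake `IModelService` for a test is a one-line change to the registry. That is the "mix and match" property in miniature.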

Every week there was a real risk of falling down the rabbit hole of some primitive. All UI in VSCode is built imperatively, and even extensions funnel their UI (save for their webviews) through the core layer. This is what keeps everything super snappy (and multi-threading also helps!). It also means that the VSCode team built primitives for just about everything.

At the beginning, this felt a bit daunting. On my first day, it took me all day to close a PR that let users quickly change the reasoning model. But after enough work on the IDE, it clicked, and reshaping big chunks of the codebase became second nature.

Getting outshipped

We did a lot of self-reflection within the team about what went wrong. I won’t go into the details here – in a nutshell, we avoided resolving fundamental points of contention within the team.

I share responsibility for that. I accepted this internal rift and did not speak up. As for my role, I believe there is no difference between designing and engineering: when we engineer a new feature, we are already establishing core UX. Going along with poor communication between the AI and IDE teams resulted in a subpar UX.

The bitter lesson – being SOTA on SWE-bench, and making peace with the chat interface

We all learned important lessons. For me, in particular, it was “the bitter lesson of AI UX”, as Talor, a Design Engineer at Replit, put it.

In the machine learning sphere, “the bitter lesson” refers to Rich Sutton’s essay of the same name. In short, it argues that general methods that scale with computation end up beating clever, hand-engineered approaches. The AI team at Codestory re-learned it when topping SWE-bench, twice.

For codegen UX, the bitter lesson is accepting that the chat interface wins over “smart” approaches. Over-designing the way we serve LLM output to the user was a big miss on my part. LLMs spit out language predictions: creating a textual form – as in, a weave of words – is what they’re remarkably good at. We should kinda leave that alone.

Successful companies like Cursor innovated around the chat interface. We also moved everything back to the chat, with bits of specialized UI here and there.

Example of an agentic flow, where the agent implements and tests a new API endpoint.

A more mature version of Aide, where we invested a lot in stability and productivity over UX experimentation.

Visual editor

With that lesson learned, I unlocked energy to focus on other features. For example, I created a visual editor within the IDE that allowed users to iterate visually, similar to bolt.new and lovable.dev. It also halved the average time from query to completion for visual tasks.

Visual editor within the IDE. The agent is tasked with making visual changes and taking screenshots after every change to validate its work.

The change is subtle – but it’s that level of precision we were after when testing the capabilities of our visual editor.

I integrated with React devtools – users could point and click on any component and provide the agent with source file and runtime information like props and state. I did that by forking the React monorepo, where the devtools-core module lives. I shoehorned the devtools client into the extension layer of the IDE, and injected the backend into a browser view via a reverse proxy.
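
Here is a rough sketch of the reverse-proxy trick, assuming a Node `http` proxy sitting in front of the user’s dev server. HTML responses get a `<script>` tag injected at the top of `<head>`, so the devtools backend loads before React does. The script path, the function names, and the choice to buffer only `text/html` responses are assumptions made for illustration, not how Aide actually did it. Serving the backend bundle itself is omitted.

```typescript
import * as http from "node:http";

// Hypothetical path where the proxy would serve the React devtools backend bundle
// (serving that file is omitted from this sketch).
const DEVTOOLS_BACKEND_SRC = "/__devtools_backend.js";

// Insert a <script> right after the opening <head> tag, so the devtools backend
// loads before the app's own bundle (and therefore before React) runs.
export function injectScript(html: string, src: string): string {
  const tag = `<script src="${src}"></script>`;
  // Match <head> or <head ...attrs>, but not <header>.
  const head = /<head(\s[^>]*)?>/i.exec(html);
  if (!head) return tag + html; // no <head>: fall back to prepending
  const at = head.index + head[0].length;
  return html.slice(0, at) + tag + html.slice(at);
}

// Reverse proxy in front of the user's dev server: HTML responses are buffered
// and rewritten; everything else (JS, CSS, images) is streamed through untouched.
export function createInjectingProxy(targetHost: string, targetPort: number): http.Server {
  return http.createServer((req, res) => {
    const upstream = http.request(
      {
        host: targetHost,
        port: targetPort,
        method: req.method,
        path: req.url,
        headers: {
          ...req.headers,
          host: `${targetHost}:${targetPort}`,
          // Ask for an uncompressed body so the HTML can be edited as text.
          "accept-encoding": "identity",
        },
      },
      (upRes) => {
        const contentType = upRes.headers["content-type"] ?? "";
        if (!contentType.includes("text/html")) {
          res.writeHead(upRes.statusCode ?? 502, upRes.headers);
          upRes.pipe(res);
          return;
        }
        const chunks: Buffer[] = [];
        upRes.on("data", (chunk: Buffer) => chunks.push(chunk));
        upRes.on("end", () => {
          const html = injectScript(Buffer.concat(chunks).toString("utf8"), DEVTOOLS_BACKEND_SRC);
          const headers = { ...upRes.headers };
          delete headers["content-length"]; // body length changed
          res.writeHead(upRes.statusCode ?? 200, headers);
          res.end(html);
        });
      },
    );
    upstream.on("error", () => {
      if (!res.headersSent) res.writeHead(502);
      res.end();
    });
    req.pipe(upstream);
  });
}
```

A real setup would also need to forward WebSocket upgrades (for hot reloading and the devtools socket), which this sketch skips.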

By injecting another small script that sent navigation events, I made sure the devtools socket reconnected when the user changed routes. This also allowed for a nice sleight of hand: showing the user’s dev server URL in the address bar instead of the proxy’s.
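
A rough sketch of both halves of that trick follows. The in-page half wraps `history.pushState`/`replaceState` and listens for `popstate`, the standard browser hooks for client-side route changes. Two parts are assumptions for illustration: how the event travels back to the editor (the `post` callback, e.g. via `window.parent.postMessage`), and the editor-side `toDisplayUrl` helper. `NavWindow` is a narrow stand-in for the real `window`, so the logic can run outside a browser.

```typescript
// Minimal slice of the browser APIs this sketch touches.
interface NavWindow {
  location: { href: string };
  history: {
    pushState(data: unknown, unused: string, url?: string | null): void;
    replaceState(data: unknown, unused: string, url?: string | null): void;
  };
  addEventListener(type: "popstate", listener: () => void): void;
}

type NavEvent = { type: "navigate"; url: string };

// Runs inside the proxied page: report every client-side route change.
export function installNavigationReporter(win: NavWindow, post: (e: NavEvent) => void): void {
  const report = () => post({ type: "navigate", url: win.location.href });
  for (const method of ["pushState", "replaceState"] as const) {
    const original = win.history[method].bind(win.history);
    win.history[method] = (data: unknown, unused: string, url?: string | null) => {
      original(data, unused, url); // let the router navigate first
      report();
    };
  }
  win.addEventListener("popstate", report); // back/forward buttons
}

// Runs in the editor: show the dev server's origin instead of the proxy's.
export function toDisplayUrl(proxiedUrl: string, proxyOrigin: string, devServerOrigin: string): string {
  const url = new URL(proxiedUrl);
  if (url.origin !== new URL(proxyOrigin).origin) return proxiedUrl; // not proxied
  return new URL(url.pathname + url.search + url.hash, devServerOrigin).toString();
}
```

On each `navigate` event, the editor side would then reconnect the devtools socket and put `toDisplayUrl(...)` in the address bar.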

Extension and AgentFarm

The first (brief) pivot we did was porting our agent to the VSCode extensions marketplace. I created the extension’s scaffolding, pipeline and branding in two days, allowing the team to quickly launch it.

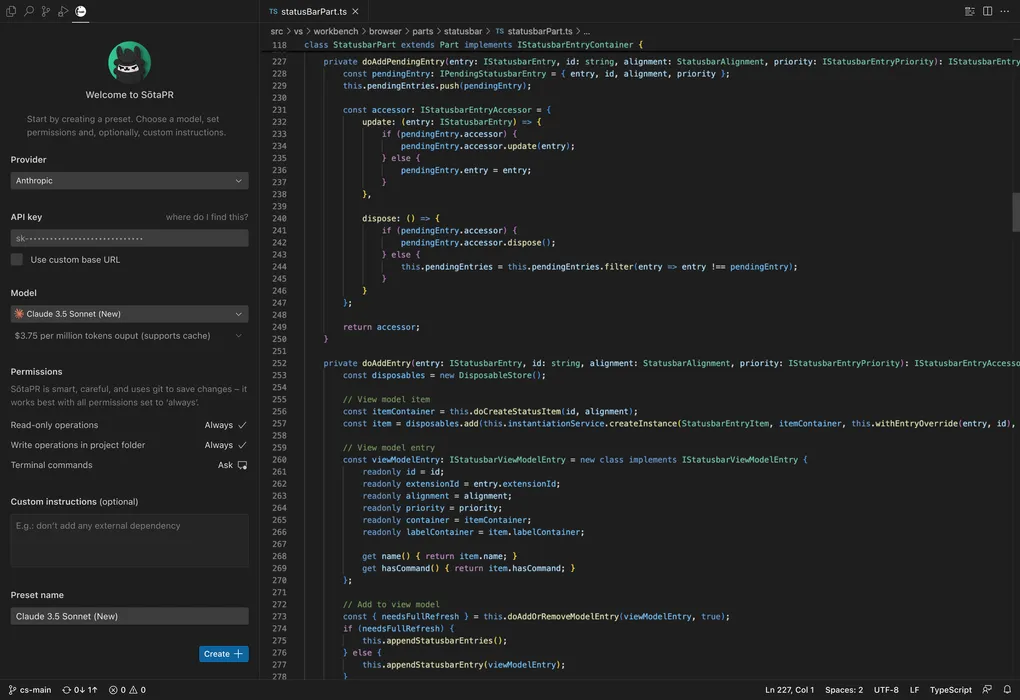

Onboarding view.

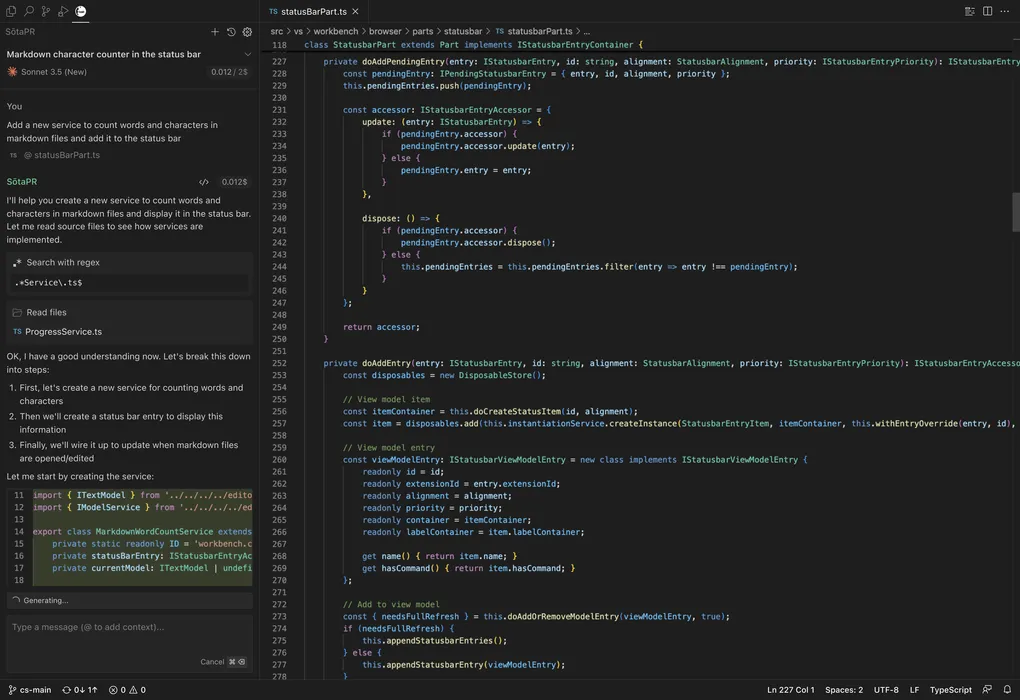

The agent is busy on a rather involved task that required searching and reading through the codebase.

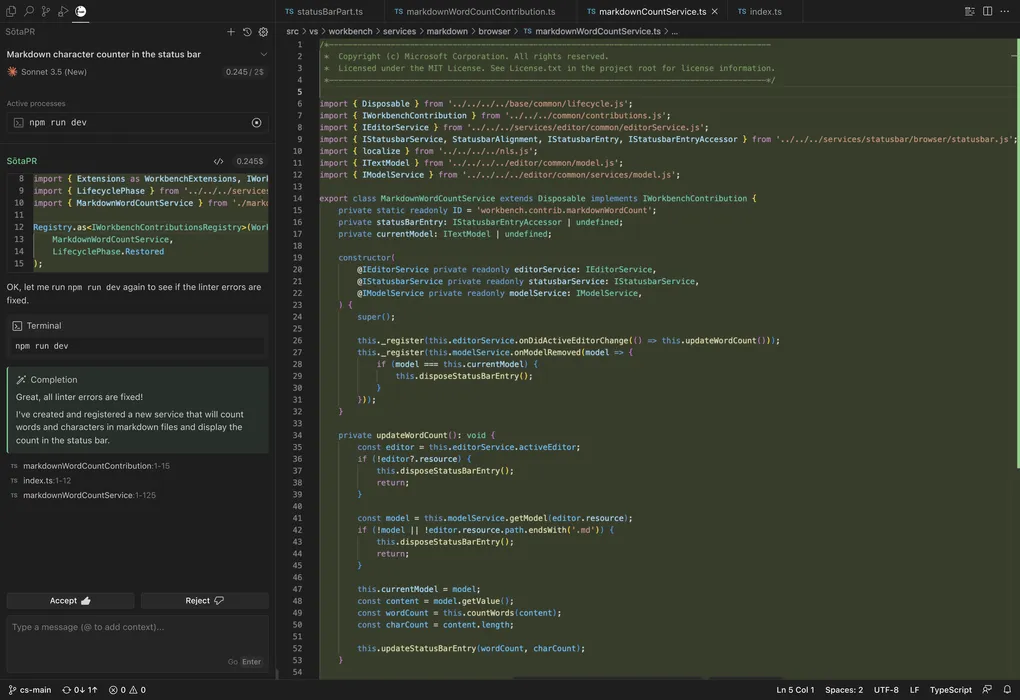

The agent iterates on linter errors and uses the terminal to validate its work.

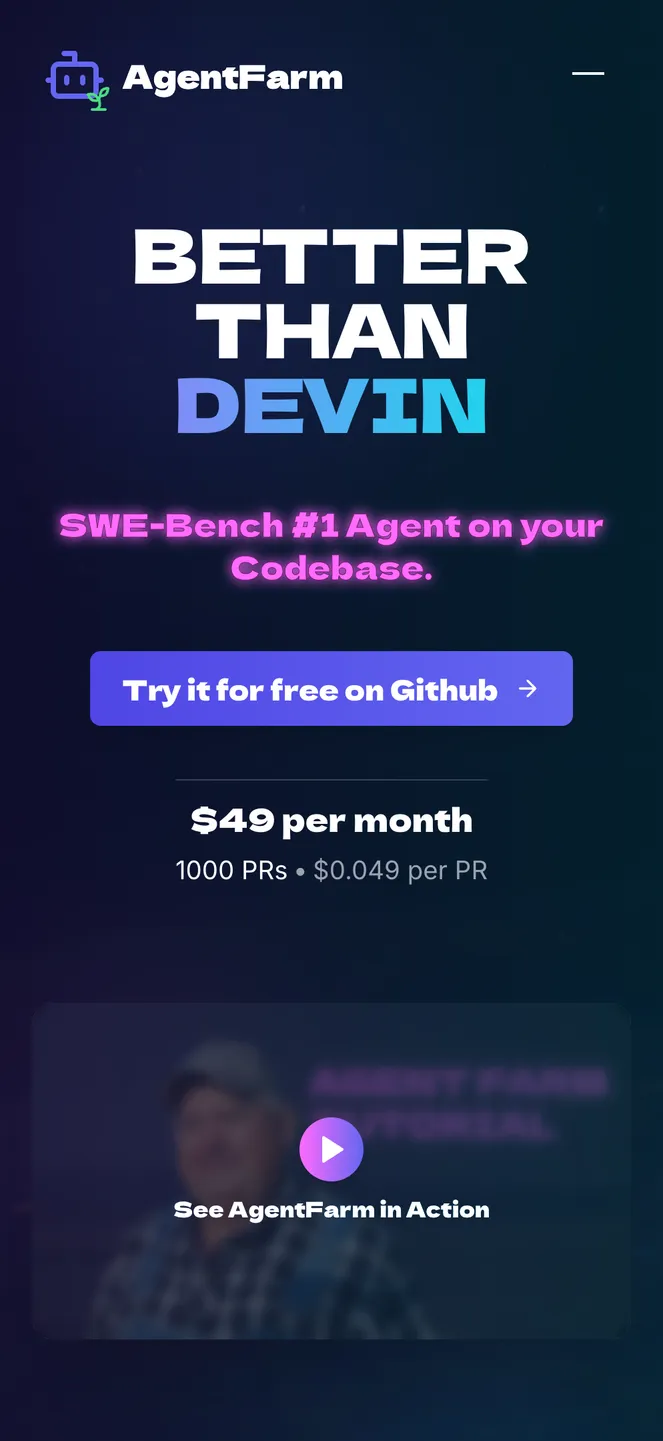

The second (a bit more desperate) pivot was AgentFarm – agents-as-a-service working on GitHub issues. We sold an internal productivity tool basically as-is. We piggybacked on GitHub PRs for our UX, embraced an ironic tone, and just shipped.

AgentFarm used from a mobile device. It autonomously rewrites its source code to support the new Sonnet 3.7 model.

I co-led this challenging pivot by handling go-to-market. Within two days, I rebranded, set up ad campaigns with an automated sales funnel and completed sales calls. In the short month we operated, users merged about 800 GitHub issues.

Takeaways

I will keep them nice and short. They may seem obvious to some of you, but they bear repeating:

- Ship early, fast, and often.

- There is no alternative to finding a solution to a problem. Address problems early.

- Be confident, speak up.

- Don’t open Figma ’til Series A (depending on project and team size).